Prompt Versioning: Lifecycle Guide for Scalable AI

Mastering Prompt Versioning and Lifecycle Management: A Practical Guide

Prompt versioning and lifecycle management is the systematic process of creating, testing, deploying, monitoring, and retiring prompts for Large Language Models (LLMs). Much like the software development lifecycle (SDLC), it treats prompts not as simple text strings, but as critical application components that require rigorous control. In the early days of experimentation, a simple text file might suffice, but as AI applications scale, this approach quickly becomes unmanageable. Implementing a formal lifecycle ensures consistency, traceability, and reliability in your AI systems. It allows teams to track changes, collaborate effectively, evaluate performance over time, and roll back to previous versions if a new prompt underperforms, turning chaotic experimentation into a disciplined engineering practice.

What is Prompt Lifecycle Management (PLM)?

At its core, Prompt Lifecycle Management (PLM) is a structured framework for overseeing a prompt’s entire journey, from its initial conception to its eventual retirement. It’s about moving beyond the “edit and pray” method of prompt engineering and adopting a more professional, predictable methodology. A common pitfall is assuming the work is done once a prompt is written; in reality, the prompt’s life has just begun. Effective PLM acknowledges that prompts are dynamic assets that must adapt to new model versions, changing user needs, and evolving business goals.

So, what does this lifecycle actually look like in practice? It can be broken down into several distinct stages, each with its own set of goals and activities:

- Creation & Design: The initial phase where the prompt’s purpose, target audience, and desired output format are defined. This involves brainstorming, requirement gathering, and crafting the first draft.

- Testing & Evaluation: The prompt is rigorously tested against a “golden dataset” of inputs to measure its accuracy, coherence, and safety. A/B testing against other prompt variations is common here.

- Deployment: Once approved, the prompt is moved into a production environment. This should be a controlled process, often integrated into a CI/CD pipeline.

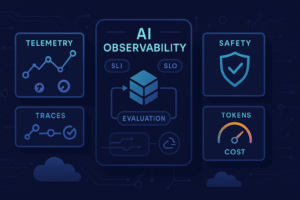

- Monitoring: In production, the prompt’s performance is continuously tracked. Key metrics include latency, cost per generation, user satisfaction scores, and the rate of successful outputs.

- Iteration & Optimization: Based on monitoring data and user feedback, the prompt is refined and improved. This kicks off a new cycle of testing and deployment.

- Retirement: When a prompt becomes obsolete or is replaced by a significantly better version, it is formally archived and removed from production.
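The stages above can be sketched as a small state machine. This is a minimal illustration, not a standard: the `Stage` names, the `ALLOWED_TRANSITIONS` table, and the `advance` helper are all assumptions made for this example, and a real team may allow different moves (for instance, retiring a prompt straight from testing).

```python
from enum import Enum


class Stage(Enum):
    DESIGN = "creation_and_design"
    TESTING = "testing_and_evaluation"
    DEPLOYED = "deployment"
    MONITORING = "monitoring"
    ITERATING = "iteration_and_optimization"
    RETIRED = "retirement"


# Allowed moves between stages (an illustrative assumption, not a standard).
ALLOWED_TRANSITIONS = {
    Stage.DESIGN: {Stage.TESTING},
    # A prompt that fails evaluation goes back to design.
    Stage.TESTING: {Stage.DESIGN, Stage.DEPLOYED},
    Stage.DEPLOYED: {Stage.MONITORING},
    Stage.MONITORING: {Stage.ITERATING, Stage.RETIRED},
    # Iteration always re-enters testing before the next deployment.
    Stage.ITERATING: {Stage.TESTING},
    Stage.RETIRED: set(),
}


def advance(current: Stage, target: Stage) -> Stage:
    """Return the target stage if the move is allowed, else raise ValueError."""
    if target not in ALLOWED_TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.name} to {target.name}")
    return target
```

Encoding the transitions as data makes the rule "nothing reaches deployment without passing through testing" something a script can enforce rather than a convention people have to remember.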

The Pillars of Effective Prompt Versioning

If PLM is the overall strategy, then prompt versioning is the foundational tactic that makes it all possible. Simply saving files as `prompt_v1.txt` or `prompt_final_final.txt` is a recipe for disaster. It offers no insight into why a change was made, who made it, or how it impacted performance. A robust versioning system provides the traceability needed to manage prompts with confidence, especially in a team environment. The goal is to create an auditable history for every single prompt.

The principles of software version control, particularly those embodied by systems like Git, are perfectly suited for this challenge. By storing prompts in a Git repository, you immediately gain powerful capabilities. Every change can be tied to a specific commit with a descriptive message, explaining the rationale behind the update. You can use branches to experiment with new prompt ideas without affecting the stable, production version. When a new version is ready for release, you can merge it and create a tag (e.g., `v2.1.0`), providing a clear reference point. This approach transforms prompt management from a guessing game into a transparent, collaborative process.

However, versioning the prompt text alone is not enough. To truly understand a prompt’s behavior, you must also version its surrounding context. This means tracking critical metadata alongside each version:

- Model & Parameters: The exact model used (e.g., `gpt-4-turbo-2024-04-09`) and the settings applied (temperature, top_p, max_tokens). A prompt that works brilliantly with one model might fail with another.

- Performance Metrics: Objective data from your evaluation stage, such as accuracy scores, latency, or cost.

- Changelog: A human-readable summary of what was changed and why. For example, “Added XML tags to improve structured output” is far more useful than a generic commit message.

- Dependencies: Information about any external tools, APIs, or knowledge bases the prompt relies on.
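One way to keep this metadata next to the prompt text is a small record type. The sketch below is an assumption about how such a record might look: the `PromptVersion` class, its field names, the `support_summary` prompt, and the `ticket_api` dependency are hypothetical. The `fingerprint` method hashes the text together with the model and parameters, since a change to any of them can change behavior.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: str
    text: str
    model: str
    parameters: dict
    changelog: str
    metrics: dict = field(default_factory=dict)  # filled in by the evaluation stage
    dependencies: tuple = ()

    def fingerprint(self) -> str:
        """Short hash of the text, model, and parameters."""
        payload = json.dumps(
            {"text": self.text, "model": self.model, "parameters": self.parameters},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


# Hypothetical example record; every value here is illustrative.
record = PromptVersion(
    name="support_summary",
    version="2.1.0",
    text="Summarize the ticket inside <ticket> tags in three bullet points.",
    model="gpt-4-turbo-2024-04-09",
    parameters={"temperature": 0.2, "top_p": 1.0, "max_tokens": 300},
    changelog="Added XML tags to improve structured output",
    dependencies=("ticket_api",),
)

# Serialize for storage alongside the prompt file in the repository.
print(json.dumps(asdict(record), indent=2))
print(record.fingerprint())
```

Because the fingerprint covers the parameters as well as the text, two records with identical wording but different temperature settings are treated as different versions.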

Tools and Platforms to Streamline Your Workflow

As you formalize your prompt management process, you’ll inevitably face a “build vs. buy” decision for your tooling. For small projects or individual developers, a “build” approach using existing tools can be highly effective. This DIY stack often combines a few key components to create a surprisingly powerful system. The cornerstone is a version control system like Git, hosted on platforms such as GitHub or GitLab. This handles the core versioning and collaboration.

To complement Git, teams often use databases or even sophisticated spreadsheets to track the rich metadata and performance metrics associated with each prompt version. For automation, CI/CD (Continuous Integration/Continuous Deployment) pipelines, using tools like GitHub Actions or Jenkins, can be configured to automatically run evaluation tests whenever a prompt is updated. This helps keep a poorly performing prompt out of production, provided the evaluation suite covers the cases that matter. This stack gives you complete control but requires significant setup and maintenance.

For larger teams or organizations where efficiency is paramount, specialized “buy” solutions are emerging. These are often called Prompt Management Platforms or LLMOps tools (e.g., Vellum, PromptLayer, Langfuse). These platforms provide an integrated, end-to-end solution for the entire prompt lifecycle. They offer features like a centralized prompt registry, built-in A/B testing environments, collaborative editing interfaces, and detailed performance dashboards. By unifying versioning, testing, and monitoring into a single workflow, they can dramatically accelerate development and reduce the operational overhead of managing prompts at scale.

Best Practices for a Scalable Prompt Management Strategy

Building a robust PLM strategy requires more than just tools; it requires a shift in mindset and a commitment to best practices. Perhaps the most critical practice is establishing a centralized prompt registry. This registry acts as the single source of truth for all prompts used in your applications. It prevents the dreaded “shadow engineering,” where different teams use slightly different, untracked versions of the same prompt, leading to inconsistent user experiences and duplicated effort. Every production prompt should live in this registry, complete with its full version history and metadata.
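To make the idea of a registry concrete, here is a minimal in-memory sketch. It is not how any particular platform works: the `PromptRegistry` class and its `publish`, `get`, `rollback`, and `history` methods are hypothetical names chosen for this example, and a production registry would persist its data and enforce access control.

```python
class PromptRegistry:
    """In-memory registry: each prompt name maps to an ordered version history."""

    def __init__(self) -> None:
        self._history = {}  # name -> list of version records
        self._active = {}   # name -> 1-based number of the active version

    def publish(self, name: str, text: str, author: str, note: str) -> int:
        """Append a new version, make it active, and return its version number."""
        versions = self._history.setdefault(name, [])
        versions.append({"text": text, "author": author, "note": note})
        self._active[name] = len(versions)
        return len(versions)

    def get(self, name: str) -> str:
        """Return the text of the currently active version."""
        return self._history[name][self._active[name] - 1]["text"]

    def rollback(self, name: str, version: int) -> None:
        """Point the active marker at an earlier version; history is untouched."""
        if not 1 <= version <= len(self._history[name]):
            raise ValueError(f"{name} has no version {version}")
        self._active[name] = version

    def history(self, name: str) -> list:
        """Return a copy of the full version history for auditing."""
        return list(self._history[name])


registry = PromptRegistry()
registry.publish("greeting", "Say hello to the user.", "ana", "first draft")
registry.publish("greeting", "Greet the user warmly by name.", "ben", "friendlier tone")
registry.rollback("greeting", 1)  # version 2 underperformed, so restore version 1
print(registry.get("greeting"))
```

Applications look prompts up by name through `get`, so a rollback takes effect everywhere at once and no team carries its own untracked copy.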

Next, you must implement clear governance and collaboration protocols. Who has the authority to approve changes to a critical, customer-facing prompt? Define roles and responsibilities (such as Prompt Engineer, QA Tester, and Product Manager) and establish a mandatory review process. For high-stakes prompts, a “four-eyes principle” (requiring that at least two people, typically the author and an independent reviewer, approve a change) can prevent costly errors. This structure ensures that prompt updates are deliberate and well-vetted, not rushed and haphazard.

Finally, embrace automated regression testing. Every time you consider deploying a new prompt version or upgrading the underlying LLM, you must answer a crucial question: “Does this change negatively impact any existing functionality?” To answer this, you need a suite of automated tests that run your prompt against a standard set of inputs and validate the outputs. This “golden dataset” acts as a safety net, catching regressions before they affect users. Without automated testing, every update is a gamble; with it, each update is backed by evidence that the known cases still pass.
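A regression run against a golden dataset can be sketched in a few lines. Everything named here is an assumption for illustration: the `GOLDEN_CASES` entries, the `PROMPT_TEMPLATE`, and especially `fake_model`, which stands in for a real LLM call so the example runs offline. A substring check is also the simplest possible validator; real suites often use structured checks or graded scoring.

```python
from typing import Callable

# Hypothetical golden dataset: each case pairs an input with a check on the output.
GOLDEN_CASES = [
    {"input": "Refund request for order 1042", "must_contain": "refund"},
    {"input": "Password reset not working", "must_contain": "password"},
]

PROMPT_TEMPLATE = "Classify the support ticket and name its topic.\nTicket: {ticket}"


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call (an assumption so the sketch runs offline)."""
    ticket = prompt.split("Ticket: ", 1)[1]
    return f"topic: {ticket.split()[0].lower()}"


def run_regression(template: str, model: Callable[[str], str], cases: list) -> float:
    """Return the fraction of golden cases whose output passes its check."""
    passed = 0
    for case in cases:
        output = model(template.format(ticket=case["input"]))
        if case["must_contain"] in output.lower():
            passed += 1
    return passed / len(cases)


pass_rate = run_regression(PROMPT_TEMPLATE, fake_model, GOLDEN_CASES)
print(f"pass rate: {pass_rate:.0%}")
```

In a pipeline, the script would exit with a non-zero status when the pass rate falls below an agreed threshold, which blocks the merge or deployment.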

Conclusion

Prompt versioning and lifecycle management are no longer optional disciplines for teams serious about building reliable, scalable AI products. By moving away from ad-hoc text files and adopting a structured engineering approach, you transform prompts from fragile art into robust assets. Treating prompts like code, with rigorous version control, automated testing, continuous monitoring, and clear governance, is a dependable way forward. This methodology mitigates risk, improves collaboration, and helps your AI applications deliver on their potential. As prompt engineering matures as a field, mastering these practices will be a key differentiator between teams that struggle with inconsistency and those that innovate with confidence and precision.

Frequently Asked Questions

Why can’t I just save my prompts in a Google Doc?

While a shared document is great for initial brainstorming, it falls short for production systems. Its built-in version history records edits, but it lacks a per-change rationale, the ability to branch for experiments, and direct integration with testing and deployment pipelines. For anything beyond solo experimentation, a structured system like Git is a better fit for preventing errors and ensuring traceability.

What is “prompt drift”?

Prompt drift is the gradual degradation of a prompt’s performance over time. This can happen for two main reasons: the underlying LLM is updated by its provider (even minor updates can change behavior), or the patterns in user input change. A robust Prompt Lifecycle Management process, especially the monitoring stage, is essential for detecting and correcting prompt drift before it impacts users.

How often should I review my production prompts?

There’s no single answer, but a good strategy is based on both a schedule and performance triggers. High-impact prompts should be reviewed on a regular schedule, such as quarterly or whenever a major new version of the underlying LLM is released. More importantly, you should set up automated monitoring and alerts. If a prompt’s success rate drops or its cost spikes, that should trigger an immediate review, regardless of the schedule.
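The combination of a schedule and performance triggers can be expressed as a single check. This is a sketch under stated assumptions: the `needs_review` function and its default thresholds (90 days, a 0.05 drop in success rate, a 1.5x cost ratio) are hypothetical values chosen for illustration, not recommendations.

```python
from datetime import date, timedelta


def needs_review(
    last_review: date,
    today: date,
    success_rate: float,
    baseline_success_rate: float,
    cost_per_call: float,
    baseline_cost_per_call: float,
    max_age_days: int = 90,          # roughly quarterly (assumed default)
    max_success_drop: float = 0.05,  # absolute drop in success rate (assumed)
    max_cost_ratio: float = 1.5,     # cost relative to baseline (assumed)
) -> list:
    """Return the reasons a prompt should be reviewed; empty means no review due."""
    reasons = []
    if today - last_review > timedelta(days=max_age_days):
        reasons.append("scheduled review overdue")
    if baseline_success_rate - success_rate > max_success_drop:
        reasons.append("success rate dropped")
    if cost_per_call > baseline_cost_per_call * max_cost_ratio:
        reasons.append("cost spiked")
    return reasons


reasons = needs_review(
    last_review=date(2024, 1, 1),
    today=date(2024, 2, 1),
    success_rate=0.85,
    baseline_success_rate=0.95,
    cost_per_call=0.004,
    baseline_cost_per_call=0.002,
)
print(reasons)
```

Returning a list of reasons rather than a boolean lets an alert say why the review was triggered, which is useful when the schedule and the metrics disagree.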