Feedback Loops in LLM Apps: From Thumbs-Up Buttons to Continuous Improvement

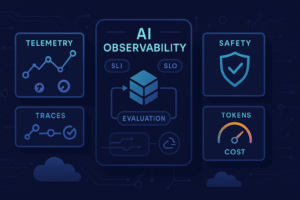

Feedback loops in large language model (LLM) applications represent the systematic process of collecting, analyzing, and implementing user input to refine model performance and user experience. These mechanisms range from simple binary reactions like thumbs-up buttons to sophisticated multi-dimensional evaluation systems that capture nuanced user preferences. As LLM applications become increasingly embedded in business workflows and consumer products, establishing robust feedback loops has emerged as a critical success factor. Effective feedback systems transform static AI deployments into dynamic, self-improving platforms that adapt to real-world usage patterns, identify edge cases, and continuously enhance output quality while maintaining alignment with user expectations and organizational objectives.

Understanding the Feedback Loop Architecture in LLM Applications

The architecture of feedback loops in LLM applications consists of several interconnected components that work together to capture, process, and leverage user input. At the foundation lies the data collection layer, where user interactions are recorded through various mechanisms including explicit feedback buttons, implicit behavioral signals, and contextual metadata. This layer must balance comprehensiveness with user privacy, ensuring that valuable insights are gathered without creating friction in the user experience or violating data protection regulations.
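
As a rough illustration of the data collection layer, here is a minimal sketch of one feedback event record that can hold both explicit and implicit signals plus contextual metadata. The field names, the `[-1, 1]` value convention, and the salted-hash pseudonymization are illustrative assumptions, not a standard schema. Note that salted hashing is pseudonymization rather than anonymization, so it reduces privacy exposure but does not remove it.

```python
import hashlib
import time
from dataclasses import dataclass, field
from typing import Literal

# Hypothetical taxonomy: "explicit" = buttons/ratings, "implicit" = behavioral signals.
FeedbackKind = Literal["explicit", "implicit"]

@dataclass
class FeedbackEvent:
    response_id: str      # which model output this event refers to
    user_key: str         # pseudonymous key, never the raw user ID
    kind: FeedbackKind
    signal: str           # e.g. "thumbs_up", "copy", "regenerate"
    value: float          # normalized score in [-1, 1]
    metadata: dict = field(default_factory=dict)  # prompt version, model name, etc.
    timestamp: float = field(default_factory=time.time)

def pseudonymize(user_id: str, salt: str) -> str:
    """Salted SHA-256 so raw identifiers never reach the feedback store."""
    return hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()

def make_event(user_id, response_id, kind, signal, value, salt, **metadata):
    """Validate and build an event; extra keyword arguments become contextual metadata."""
    if not -1.0 <= value <= 1.0:
        raise ValueError("value must be normalized to [-1, 1]")
    return FeedbackEvent(
        response_id=response_id,
        user_key=pseudonymize(user_id, salt),
        kind=kind,
        signal=signal,
        value=value,
        metadata=metadata,
    )
```

For example, `make_event("alice", "resp-42", "explicit", "thumbs_up", 1.0, salt="s3cret", prompt_version="v7")` records a thumbs-up tied to a prompt version without storing the string `"alice"`.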

Beyond simple collection, the processing and analysis pipeline transforms raw feedback into actionable intelligence. This involves aggregating responses, identifying patterns, filtering noise and malicious inputs, and correlating feedback with specific model behaviors or prompt structures. Modern LLM feedback systems employ both automated analysis and human-in-the-loop review, particularly for edge cases or responses that receive conflicting evaluations. The challenge lies in creating systems that can operate at scale while maintaining the granularity needed for meaningful improvements.
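
A minimal sketch of the aggregation step, assuming explicit votes arrive as `(response_id, user_key, value)` tuples with `value` in `{-1, +1}`. It deduplicates repeated votes from the same user (a simple noise and abuse filter) and routes responses with enough votes but a near-zero mean, i.e. conflicting evaluations, to a human review queue. The `min_votes` and `conflict_band` thresholds are hypothetical and would need tuning.

```python
from collections import defaultdict
from statistics import mean

def aggregate_votes(events, min_votes=3, conflict_band=0.3):
    """Aggregate explicit votes per response and flag contested responses for human review.

    events: iterable of (response_id, user_key, value) tuples, value in {-1, +1}.
    Returns (summary, review_queue).
    """
    # Keep only each user's latest vote per response, so one user cannot flood the count.
    latest = defaultdict(dict)
    for response_id, user_key, value in events:
        latest[response_id][user_key] = value

    summary, review_queue = {}, []
    for response_id, by_user in latest.items():
        values = list(by_user.values())
        score = mean(values)
        summary[response_id] = {"votes": len(values), "mean": score}
        # Enough votes but a mean near zero means raters disagree: escalate to a human.
        if len(values) >= min_votes and abs(score) <= conflict_band:
            review_queue.append(response_id)
    return summary, review_queue
```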

The final component is the implementation mechanism that closes the loop by feeding insights back into the system. This might involve retraining models on curated datasets, adjusting prompt engineering strategies, updating retrieval-augmented generation (RAG) knowledge bases, or modifying safety filters and guardrails. The sophistication of this component determines whether feedback truly drives improvement or merely accumulates as unused data. Organizations must establish clear workflows for triaging feedback, prioritizing improvements, and measuring the impact of changes to validate that the loop is functioning effectively.

Explicit Feedback Mechanisms: Design and Implementation Strategies

Explicit feedback mechanisms give users a direct way to evaluate LLM outputs, and the classic thumbs-up/thumbs-down button is the most recognizable implementation. Even binary feedback requires deliberate design decisions. When should the buttons appear? Should users be able to change their vote? What happens after a user clicks: a confirmation, a follow-up question, or silent acceptance? In general, the less friction a feedback control adds, the more users engage with it, but a bare click with no surrounding context says little about what actually went right or wrong, which lowers the signal quality of the data collected.
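
The design questions above can be made concrete with a small in-memory sketch: users may change or retract a vote (the latest vote wins), and a downvote optionally triggers a single, skippable follow-up question rather than a blocking dialog. The class name, the follow-up text, and the in-memory storage are all hypothetical choices for illustration.

```python
from enum import Enum
from typing import Dict, Optional, Tuple

class Vote(Enum):
    UP = 1
    DOWN = -1

class BinaryFeedback:
    """Thumbs-up/down store where a user may change or retract their vote."""

    def __init__(self, ask_followup_on_down: bool = True):
        self._votes: Dict[Tuple[str, str], Vote] = {}
        self.ask_followup_on_down = ask_followup_on_down

    def cast(self, user: str, response_id: str, vote: Optional[Vote]) -> Optional[str]:
        """Record a vote; returns an optional follow-up prompt to show the user."""
        key = (user, response_id)
        if vote is None:
            self._votes.pop(key, None)  # retraction removes the vote entirely
            return None
        self._votes[key] = vote  # changing a vote overwrites the previous one
        if vote is Vote.DOWN and self.ask_followup_on_down:
            return "What went wrong? (optional)"  # one skippable question, no modal
        return None

    def tally(self, response_id: str) -> Dict[str, int]:
        counts = {"up": 0, "down": 0}
        for (_, rid), vote in self._votes.items():
            if rid == response_id:
                counts["up" if vote is Vote.UP else "down"] += 1
        return counts
```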

More sophisticated explicit feedback systems incorporate multi-dimensional rating scales that capture nuanced aspects of model performance. Users might separately evaluate accuracy, helpfulness, relevance, tone, completeness, and safety. Some applications collect comparative feedback, asking users to choose between two or more generated responses; these relative quality signals are particularly valuable for reinforcement learning from human feedback (RLHF), where reward models are commonly trained on pairwise preferences. The key challenge is balancing the richness of the information collected against user fatigue and abandonment, since each additional click or question tends to lower completion rates.
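
To show how pairwise choices turn into relative quality scores, here is a minimal sketch that fits a Bradley-Terry model (the pairwise formulation commonly used for RLHF reward models) to `(winner, loser)` pairs with the classic minorization-maximization update. The candidates could be prompt variants or model versions; the function names are illustrative. One stated limitation: an item that never wins collapses to strength 0, so production fits usually add a prior or pseudo-counts.

```python
from collections import defaultdict

def bradley_terry(comparisons, iters=200):
    """Estimate Bradley-Terry strengths from (winner, loser) pairs via MM updates.

    Strengths are normalized to average 1.0. P(i beats j) = s_i / (s_i + s_j).
    """
    wins = defaultdict(float)
    pair_counts = defaultdict(float)
    items = set()
    for winner, loser in comparisons:
        if winner == loser:
            continue  # ignore degenerate self-comparisons
        wins[winner] += 1
        pair_counts[frozenset((winner, loser))] += 1
        items.update((winner, loser))

    strength = {i: 1.0 for i in items}
    for _ in range(iters):
        new = {}
        for i in items:
            # Denominator: sum over opponents of n_ij / (s_i + s_j).
            denom = 0.0
            for pair, n in pair_counts.items():
                if i in pair:
                    (j,) = pair - {i}
                    denom += n / (strength[i] + strength[j])
            new[i] = wins[i] / denom
        total = sum(new.values())
        strength = {i: s * len(items) / total for i, s in new.items()}
    return strength

def win_probability(strength, i, j):
    return strength[i] / (strength[i] + strength[j])
```

With three wins for `A` and one for `B`, the fitted model predicts `A` is preferred 75% of the time, matching the observed ratio.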

Contextual feedback collection represents an evolution beyond static rating systems. These approaches present adaptive feedback requests based on detected uncertainty, controversial topics, or critical use cases. For instance, an LLM application might request detailed feedback only when confidence scores fall below a threshold or when the response contradicts retrieved information. Open-ended comment fields allow users to explain their ratings, providing qualitative insights that quantitative metrics miss. However, analyzing free-text feedback at scale requires additional NLP processing and often reveals that users struggle to articulate why a response fell short.
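
The triggering rule described above can be sketched as a small policy function. It assumes the application already has a confidence score in `[0, 1]` (for example derived from token log-probabilities or a separate verifier; the text does not specify) and a boolean from some consistency check against retrieved passages. The three feedback levels and the 0.6 threshold are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ResponseContext:
    confidence: float            # assumed calibrated score in [0, 1]
    contradicts_retrieval: bool  # assumed output of a separate consistency check
    high_stakes_topic: bool = False

def feedback_request(ctx: ResponseContext, threshold: float = 0.6) -> str:
    """Decide how much feedback to ask for on a given response.

    "detailed": ask for a rating plus an optional free-text comment.
    "prompted": actively ask for a one-click rating.
    "default":  show the usual thumbs buttons without prompting.
    """
    if ctx.contradicts_retrieval or ctx.confidence < threshold:
        return "detailed"
    if ctx.high_stakes_topic:
        return "prompted"
    return "default"
```

Keeping the detailed request rare is the point: most users only ever see the default buttons, so the extra friction lands where the signal is most valuable.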

Implicit Feedback Signals: Mining User Behavior for Insights

While explicit feedback is valuable, implicit behavioral signals are often more abundant and less self-conscious indicators of user satisfaction. When users act on LLM-generated content (copying it, applying suggestions, continuing the conversation, or returning for follow-up queries), they often signal approval more reliably than a button click does. Conversely, immediate regeneration requests, rapid abandonment, or switching to alternative tools usually indicate dissatisfaction. Because these signals are produced as a side effect of normal use, they form a continuous stream of feedback that does not depend on users' willingness to provide explicit ratings.
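
A minimal sketch of turning the behaviors listed above into a per-response score. The event names mirror the paragraph, but the weights are placeholders: only their signs follow the text, and the magnitudes would need calibrating against explicit feedback.

```python
# Hypothetical weights: signs follow the text above; magnitudes are placeholders.
IMPLICIT_WEIGHTS = {
    "copy": 0.5,
    "apply_suggestion": 0.8,
    "continue_conversation": 0.2,
    "return_follow_up": 0.3,
    "regenerate": -0.7,
    "abandon_quickly": -0.5,
    "switch_tool": -0.6,
}

def implicit_score(events):
    """Sum weighted implicit signals for one response, clipped to [-1, 1].

    Unknown event names contribute nothing rather than raising.
    """
    raw = sum(IMPLICIT_WEIGHTS.get(e, 0.0) for e in events)
    return max(-1.0, min(1.0, raw))
```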

Analyzing session-level patterns reveals insights that are hard to extract from isolated response ratings. Did the user accomplish their stated goal? How many iterations did it take? Did they progressively refine their prompts, suggesting that the initial outputs missed the mark? Time-on-page, scroll depth, and engagement with embedded links all contribute to a more holistic understanding of response quality. Advanced systems correlate these behavioral patterns with model characteristics, prompt structures, and user segments to identify specific failure modes and success patterns.
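
One way to approximate the "progressive refinement" question is to compare consecutive prompts in a session: high word overlap suggests the user rephrased the same request rather than moving on. The sketch below uses Jaccard similarity on lowercase word sets, which is a deliberately crude heuristic; the 0.5 threshold and the `goal_reached_at` input (assumed to come from some task-completion signal) are hypothetical.

```python
def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two prompts, in [0, 1]."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)

def session_metrics(prompts, goal_reached_at=None, refine_threshold=0.5):
    """Summarize one session.

    prompts: user prompts in order.
    goal_reached_at: index of the turn where the task was completed, if known.
    A consecutive pair with overlap >= refine_threshold counts as a refinement.
    """
    refinements = sum(
        1 for prev, cur in zip(prompts, prompts[1:])
        if jaccard(prev, cur) >= refine_threshold
    )
    return {
        "turns": len(prompts),
        "refinements": refinements,
        "goal_reached": goal_reached_at is not None,
        "turns_to_goal": None if goal_reached_at is None else goal_reached_at + 1,
    }
```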

The challenge with implicit feedback lies in interpretation ambiguity. A user might copy LLM-generated code not because it is excellent but because it is close enough to fix by hand, which is a fundamentally different signal. Someone might abandon a conversation because of an external interruption rather than dissatisfaction with the response. Sophisticated feedback systems address this by combining multiple implicit signals, establishing baseline behavioral patterns for individual users, and using explicit feedback as ground truth to calibrate how behaviors are interpreted. The goal is to create a composite feedback score that reflects actual user satisfaction more accurately than any single metric.
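
"Using explicit feedback as ground truth" can be made concrete with a small logistic regression: train on the minority of interactions that have both implicit features and a thumbs rating, then apply the learned weights to interactions that only have implicit signals. This is a plain gradient-descent sketch in the standard library; the feature choice (for example `[copied, regenerated]`), learning rate, and epoch count are illustrative assumptions, and a real system would likely use a proper ML library with regularization.

```python
import math

def _sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_calibrator(features, labels, lr=0.5, epochs=2000):
    """Fit logistic-regression weights mapping implicit features to P(thumbs up).

    features: equal-length lists of floats, e.g. [copied, regenerated].
    labels:   1 for thumbs-up, 0 for thumbs-down (explicitly rated interactions only).
    """
    n_feat, n = len(features[0]), len(features)
    w, b = [0.0] * n_feat, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_feat, 0.0
        for x, y in zip(features, labels):
            err = _sigmoid(b + sum(wi * xi for wi, xi in zip(w, x))) - y
            for k in range(n_feat):
                grad_w[k] += err * x[k]
            grad_b += err
        # Average gradient of the log loss, one batch step per epoch.
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def composite_score(x, w, b):
    """Predicted satisfaction for an interaction that has only implicit signals."""
    return _sigmoid(b + sum(wi * xi for wi, xi in zip(w, x)))
```

If copying preceded a thumbs-up in two of three rated cases, the calibrated score for "copied, not regenerated" converges to about 2/3 rather than an arbitrary hand-picked weight.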

Closing the Loop: From Data to Actionable Improvements

Collecting feedback is only valuable if it drives tangible improvements, yet many organizations struggle with this crucial step. The first challenge involves feedback triage and prioritization. Not all negative feedback indicates problems requiring immediate action—some reflects user misunderstanding, edge cases, or requests for capabilities beyond the application’s scope. Effective systems categorize feedback into actionable buckets: bugs requiring immediate fixes, systematic performance issues suggesting model limitations, prompt engineering opportunities, knowledge base gaps in RAG systems, and feature requests for product roadmaps.
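
The buckets above can be sketched as a rule-based router. Every field here is an assumption about what the application knows: whether an error was raised, how many RAG passages were retrieved, whether the request is within the product's scope, and how many similar complaints have been seen. The repeat threshold of 10 is a placeholder, and real systems often add an ML classifier or human review on top of rules like these.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class NegativeFeedback:
    comment: str
    error_raised: bool = False            # app crashed or returned malformed output
    retrieval_hits: Optional[int] = None  # RAG passages retrieved; None if no RAG
    in_scope: bool = True                 # request matches supported use cases
    repeat_count: int = 1                 # similar complaints seen across users

def triage(fb: NegativeFeedback, systematic_threshold: int = 10) -> str:
    """Route one piece of negative feedback to a bucket; rule order encodes priority."""
    if fb.error_raised:
        return "bug"                  # needs an immediate fix
    if not fb.in_scope:
        return "feature_request"      # capability beyond current scope -> roadmap
    if fb.retrieval_hits == 0:
        return "knowledge_gap"        # RAG found nothing relevant
    if fb.repeat_count >= systematic_threshold:
        return "model_limitation"     # recurring issue across many users
    return "prompt_opportunity"       # default: try prompt changes first
```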

The implementation pathway varies depending on the improvement type. Short-term optimizations might include prompt refinements, adjusting temperature and sampling parameters, updating system instructions, or modifying retrieval strategies. These changes can be deployed rapidly and tested through A/B experiments where user feedback metrics serve as success criteria. Medium-term improvements involve curating high-quality training data from user interactions, fine-tuning models on domain-specific feedback, or implementing specialized modules for frequently problematic scenarios.
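
When thumbs-up rate is the success criterion for an A/B experiment, a standard check is a two-proportion z-test. The sketch below uses the pooled normal approximation, so it assumes reasonably large samples. One caveat worth keeping in mind: the rate is computed only over users who voted, so it inherits whatever selection bias the voting population has.

```python
import math

def two_proportion_z_test(up_a, n_a, up_b, n_b):
    """Two-sided z-test comparing thumbs-up rates of control A and candidate B.

    Returns (rate difference B - A, z statistic, two-sided p-value).
    """
    p_a, p_b = up_a / n_a, up_b / n_b
    pooled = (up_a + up_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return p_b - p_a, 0.0, 1.0  # all votes identical: no evidence of a difference
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value
```

For example, 400/1000 thumbs-up for the control versus 460/1000 for a new prompt gives z of about 2.7 and p of about 0.007, which would usually count as a real improvement.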

Long-term improvements require more substantial investment, potentially including model retraining or architecture changes. When feedback reveals systematic limitations—such as persistent factual errors in specific domains, inappropriate tone calibration, or reasoning failures on particular problem types—organizations must decide whether to invest in fundamental model improvements. This decision involves analyzing feedback volume, business impact, and technical feasibility. Crucially, organizations must establish feedback loops about the feedback loop itself, monitoring whether implemented changes actually improve user satisfaction metrics or create unintended consequences.

Transparency with users about how their feedback drives improvements builds trust and increases participation. Some applications display “improved based on feedback” indicators when users encounter updated responses, while others publish regular reports on changes implemented. This communication closes the psychological loop for users, demonstrating that their input matters and encouraging continued engagement with feedback mechanisms.

Advanced Feedback Loop Techniques and Emerging Practices

As LLM applications mature, feedback loop sophistication has advanced beyond basic rating systems. Active learning frameworks strategically request feedback on the most informative examples rather than randomly sampling user interactions. By identifying responses where the model exhibits high uncertainty, contradicts retrieved information, or falls near decision boundaries, these systems maximize learning value per feedback instance. This targeted approach reduces the total feedback volume needed while accelerating improvement in critical areas.
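
A minimal uncertainty-sampling sketch of the active learning idea: given a feedback budget, request ratings on the interactions whose outcome is least certain, with an extra bonus for responses flagged as contradicting retrieved information. It assumes some model already supplies `p_good`, an estimated probability that a response is acceptable; the bonus of 0.5 is an arbitrary illustrative value.

```python
import heapq
import math

def binary_entropy(p: float) -> float:
    """Uncertainty in bits: 1.0 at p = 0.5, 0.0 at p = 0 or 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def select_for_feedback(candidates, budget, contradiction_bonus=0.5):
    """Pick the `budget` interactions whose feedback should be most informative.

    candidates: iterable of (interaction_id, p_good, contradicts_retrieval).
    Returns interaction IDs, most informative first.
    """
    def priority(item):
        _, p_good, contradicts = item
        return binary_entropy(p_good) + (contradiction_bonus if contradicts else 0.0)
    return [item[0] for item in heapq.nlargest(budget, candidates, key=priority)]
```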

Another emerging practice involves personalized feedback loops that adapt models or retrieval strategies to individual user preferences and contexts. Rather than assuming all users share identical quality criteria, these systems maintain user-specific preference profiles built from historical feedback. One user might prioritize conciseness while another values comprehensive explanations; one might prefer formal tone while another appreciates conversational style. Personalized systems use feedback to calibrate these preferences and adjust response generation accordingly, creating experiences that feel increasingly tailored over time.
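
The preference-profile idea can be sketched with an exponential moving average per style dimension. This assumes each rated response has already been scored for verbosity and formality in `[-1, 1]` (by some separate classifier, not specified in the text), and it adapts generation through a system-prompt fragment, which is only one of several possible strategies. The learning rate and threshold are placeholders.

```python
from dataclasses import dataclass

@dataclass
class PreferenceProfile:
    """Per-user style preferences in [-1, 1]; 0.0 means nothing learned yet."""
    verbosity: float = 0.0  # -1 prefers concise, +1 prefers comprehensive
    formality: float = 0.0  # -1 prefers conversational, +1 prefers formal
    alpha: float = 0.2      # EMA learning rate

    def update(self, response_verbosity: float, response_formality: float, rating: int) -> None:
        """Move toward styles the user liked (+1) and away from ones they disliked (-1)."""
        self.verbosity = (1 - self.alpha) * self.verbosity + self.alpha * rating * response_verbosity
        self.formality = (1 - self.alpha) * self.formality + self.alpha * rating * response_formality

    def style_instruction(self, threshold: float = 0.3) -> str:
        """Translate the profile into a hypothetical system-prompt fragment."""
        parts = []
        if self.verbosity <= -threshold:
            parts.append("Be concise.")
        elif self.verbosity >= threshold:
            parts.append("Give thorough, detailed explanations.")
        if self.formality <= -threshold:
            parts.append("Use a conversational tone.")
        elif self.formality >= threshold:
            parts.append("Use a formal tone.")
        return " ".join(parts)
```

After a user upvotes five short, neutral-toned answers, the profile's verbosity drops to about -0.6 and the instruction becomes `"Be concise."`, while formality stays unset.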

Adversarial feedback and red teaming represent proactive approaches where organizations systematically seek problematic model behaviors rather than waiting for users to encounter them. Dedicated teams attempt to elicit unsafe, biased, or low-quality responses, documenting successful attack vectors and feeding this information into safety training pipelines. This controlled feedback generation accelerates the identification of vulnerabilities before they affect real users, complementing organic user feedback with comprehensive stress testing.

Finally, federated and privacy-preserving feedback mechanisms address the tension between improving models and protecting user privacy. Techniques like differential privacy, secure multi-party computation, and on-device (federated) learning enable organizations to extract value from user feedback without centralizing sensitive data. These approaches are particularly important in regulated industries or applications handling confidential information, where traditional feedback collection might be prohibited. As privacy regulations tighten globally, privacy-preserving feedback loops are likely to shift from optional enhancements to necessary infrastructure components.
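
As one concrete instance of differential privacy applied to feedback, here is a sketch of classic randomized response for thumbs-up votes: each client reports its true vote with probability e^ε / (1 + e^ε) and flips it otherwise, which satisfies ε-local differential privacy, and the server inverts the known noise to estimate the true positive rate. This is a specific technique chosen for illustration, not the only option the paragraph covers. Individual estimates can fall slightly outside [0, 1] and are unbiased only on average.

```python
import math
import random

def randomize_vote(true_vote: bool, epsilon: float, rng: random.Random) -> bool:
    """Client side: report the true vote with prob e^eps / (1 + e^eps), else flip it."""
    p_truth = math.exp(epsilon) / (1 + math.exp(epsilon))
    return true_vote if rng.random() < p_truth else not true_vote

def estimate_positive_rate(reports, epsilon: float) -> float:
    """Server side: unbiased estimate of the true thumbs-up rate from noisy reports.

    E[observed] = p * pi + (1 - p) * (1 - pi), so pi = (observed - (1 - p)) / (2p - 1).
    Requires epsilon > 0 (otherwise reports carry no information).
    """
    p = math.exp(epsilon) / (1 + math.exp(epsilon))
    observed = sum(reports) / len(reports)
    return (observed - (1 - p)) / (2 * p - 1)
```

The server never learns any individual's real vote with certainty, yet with 10,000 users and ε = 1 the aggregate estimate typically lands within a couple of percentage points of the true rate.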

Conclusion

Feedback loops transform LLM applications from static tools into continuously improving platforms that adapt to real-world usage patterns and evolving user needs. From simple thumbs-up buttons to sophisticated multi-dimensional evaluation systems, effective feedback mechanisms require thoughtful design balancing data quality, user experience, and privacy considerations. The true value emerges not merely from collecting feedback but from establishing robust processes that analyze signals, prioritize improvements, implement changes, and measure impact. As LLM applications become more prevalent across industries, organizations that master feedback loop implementation will differentiate themselves through superior user experiences, rapid adaptation to changing requirements, and sustained competitive advantage built on continuous learning and refinement.

How often should LLM applications be updated based on user feedback?

The right update frequency depends on your application's usage volume and criticality. High-traffic applications benefit from continuous monitoring, with prompt refinements shipped every one to two weeks and more substantial improvements on a monthly cycle. Critical enterprise applications might require more conservative update schedules with extensive testing. The key is establishing a consistent cadence that balances responsiveness with stability, while using robust A/B testing to validate that changes actually improve user satisfaction metrics.

What percentage of users typically provide explicit feedback in LLM applications?

Explicit feedback rates vary widely but typically range from 2-10% of interactions, with higher rates when feedback mechanisms are exceptionally low-friction or when users have a particularly good or bad experience. This is why implicit behavioral signals are so valuable: they capture information from the 90% or more of interactions that never receive a rating. Combining both feedback types creates a more complete picture of user satisfaction and model performance.

Can feedback loops introduce bias into LLM applications?

Yes, feedback loops can amplify existing biases or introduce new ones if not carefully managed. Users providing feedback may not represent your entire user base, creating selection bias. Popular responses might receive more positive feedback simply due to familiarity, creating feedback loops that reinforce rather than improve outputs. Mitigate these risks by monitoring feedback demographics, actively seeking diverse perspectives, maintaining held-out test sets that aren’t influenced by user feedback, and implementing fairness metrics alongside performance metrics.
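
One simple way to act on "monitoring feedback demographics" is post-stratification: compute the thumbs-up rate per user segment, then weight each segment by its share of the whole user base rather than its share of feedback. The segment names and numbers in the usage note are hypothetical, and the approach only corrects for imbalance between segments, not for bias within a segment.

```python
def reweighted_satisfaction(feedback_by_segment, population_share):
    """Post-stratify thumbs-up rates so each segment counts by its share of all users.

    feedback_by_segment: {segment: (thumbs_up, total_ratings)} from users who gave feedback.
    population_share:    {segment: fraction of all active users}, summing to 1.
    Returns (naive_rate, reweighted_rate).
    """
    total_up = sum(up for up, _ in feedback_by_segment.values())
    total_n = sum(n for _, n in feedback_by_segment.values())
    naive = total_up / total_n

    reweighted = 0.0
    for segment, share in population_share.items():
        up, n = feedback_by_segment.get(segment, (0, 0))
        if n == 0:
            raise ValueError(f"no feedback from segment {segment!r}; cannot reweight")
        reweighted += share * up / n
    return naive, reweighted
```

If power users are 20% of the user base but give 80% of the ratings, a naive 80% satisfaction rate can shrink to 50% once casual users are weighted by their true share, e.g. `reweighted_satisfaction({"power": (180, 200), "casual": (20, 50)}, {"power": 0.2, "casual": 0.8})`.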